DB-GPT is an open-source AI Native Data App Development framework with AWEL (Agentic Workflow Expression Language) and Agents.

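Its goal is to build infrastructure for the large-model field. It develops a range of capabilities: multi-model management (SMMF), Text2SQL optimization, a RAG framework and its optimization, Multi-Agents collaboration, and AWEL (agentic workflow orchestration). Together these make it simpler and more convenient to build LLM applications around databases.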

GitHub: https://github.com/eosphoros-ai/DB-GPT

User documentation

This walkthrough uses Alibaba Cloud's Platform for AI (PAI).

PAI-DSW free trial

GPU instance type and image version selection (following the article "Implementing an anime-style digital human with Wav2Lip + TPS-Motion-Model + CodeFormer"):

Reference:

Download the source code

git clone https://github.com/eosphoros-ai/DB-GPT.git

(dbgpt_env)/mnt/workspace> du -sh DB-GPT/

658M DB-GPT/

(dbgpt_env)/mnt/workspace>

Create a Python virtual environment

conda create -n dbgpt_env python=3.10

conda activate dbgpt_env

# run this from the DB-GPT/ directory; it will take some minutes

pip install -e ".[default]"

Copy the environment variable template

(dbgpt_env)/mnt/workspace> cd DB-GPT/

cp .env.template .env

mkdir models && cd models

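# Make sure Git LFS is installed correctly. Otherwise the models cloned with Git below may be incomplete. Use du -sh * to check the size of each downloaded folder, and compare it with the real size listed at https://www.modelscope.cn/models/Jerry0/text2vec-large-chinese/files

# (this script only adds the apt package repository; the package itself is installed with: sudo apt-get install git-lfs)

(dbgpt_env)/mnt/workspace> curl -s https://packagecloud.io/install/repositories/github/git-lfs/script.deb.sh | sudo bash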

(dbgpt_env)/mnt/workspace> git lfs install

Git LFS initialized.

(dbgpt_env)/mnt/workspace>

#### embedding model

git clone https://www.modelscope.cn/Jerry0/text2vec-large-chinese.git

#### LLM model; if you use the OpenAI, Azure, or Tongyi LLM API service, you don't need to download an LLM model

git clone https://www.modelscope.cn/ZhipuAI/glm-4-9b-chat.git

(dbgpt_env)/mnt/workspace/DB-GPT/models> du -sh *

36G glm-4-9b-chat

4.9G text2vec-large-chinese

(dbgpt_env)/mnt/workspace/DB-GPT/models>
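Besides comparing `du -sh` sizes with ModelScope, an incomplete clone can be spotted by its leftover Git LFS pointer stubs. When LFS is not active during a clone, the large files are replaced by small text files that begin with `version https://git-lfs.github.com/spec/v1`. The helper below is a hypothetical sketch (not part of DB-GPT) that lists such stubs under a model directory.

```python
from pathlib import Path

# Every Git LFS pointer file starts with this line (Git LFS spec v1).
LFS_HEADER = b"version https://git-lfs.github.com/spec/v1"
# The spec requires pointer files to be smaller than 1024 bytes.
MAX_POINTER_SIZE = 1024


def find_lfs_pointers(model_dir):
    """Return paths (relative to model_dir) of files that are still LFS pointer stubs."""
    root = Path(model_dir)
    stubs = []
    for path in sorted(root.rglob("*")):
        rel = path.relative_to(root)
        # Only regular files count; skip Git's own metadata under .git/.
        if not path.is_file() or ".git" in rel.parts:
            continue
        # Real weight shards are far larger than any pointer file.
        if path.stat().st_size >= MAX_POINTER_SIZE:
            continue
        with path.open("rb") as f:
            if f.read(len(LFS_HEADER)) == LFS_HEADER:
                stubs.append(str(rel))
    return stubs
```

For example, `find_lfs_pointers("text2vec-large-chinese")` run inside `models/` should return an empty list once the download is complete.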

Starting the server produces errors:

(dbgpt_env)/mnt/workspace/DB-GPT> python dbgpt/app/dbgpt_server.py

...(Background on this error at: https://sqlalche.me/e/20/e3q8)

2024-09-13 13:11:52 dsw-131579-6b95d86495-6hjv4 dbgpt.serve.agent.db.gpts_app[1865] ERROR create chat_knowledge_app error: (sqlite3.OperationalError) no such table: gpts_app

[SQL: DELETE FROM gpts_app WHERE gpts_app.team_mode = ? AND gpts_app.app_code = ?]

[parameters: ('native_app','chat_knowledge')]

(Background on this error at: https://sqlalche.me/e/20/e3q8)

Traceback (most recent call last):

File "/home/pai/envs/dbgpt_env/lib/python3.10/site-packages/sqlalchemy/engine/base.py", line 1970, in _exec_single_context

self.dialect.do_execute(

File "/home/pai/envs/dbgpt_env/lib/python3.10/site-packages/sqlalchemy/engine/default.py", line 924, in do_execute

cursor.execute(statement, parameters)

sqlite3.OperationalError: no such table: gpts_app

...

2024-09-13 13:11:57 dsw-131579-6b95d86495-6hjv4 dbgpt.core.awel.dag.loader[1865] ERROR Failed to import: /mnt/workspace/DB-GPT/examples/awel/simple_rag_summary_example.py, error message: Traceback (most recent call last):

File "/mnt/workspace/DB-GPT/dbgpt/model/proxy/llms/chatgpt.py", line 94, in __init__

import openai

ModuleNotFoundError: No module named 'openai'

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/mnt/workspace/DB-GPT/dbgpt/core/awel/dag/loader.py", line 91, in parse

loader.exec_module(new_module)

File "<frozen importlib._bootstrap_external>", line 883, in exec_module

File "<frozen importlib._bootstrap>", line 241, in _call_with_frames_removed

File "/mnt/workspace/DB-GPT/examples/awel/simple_rag_summary_example.py", line 64, in <module>

llm_client=OpenAILLMClient(), language="en"

File "/mnt/workspace/DB-GPT/dbgpt/model/proxy/llms/chatgpt.py", line 96, in __init__

raise ValueError(

ValueError: Could not import python package: openai Please install openai by command `pip install openai

....
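The first error says that the SQLite database opened at startup has no `gpts_app` table. To see which tables a SQLite file actually contains, Python's built-in `sqlite3` module can query the `sqlite_master` catalog. The helper names below are hypothetical, and the database path is a placeholder: point it at whichever SQLite file your DB-GPT configuration uses.

```python
import sqlite3


def list_tables(db_path):
    """Return the sorted names of all tables in a SQLite database file."""
    # Open read-only via a file: URI so a wrong path raises an error
    # instead of silently creating a new, empty database.
    conn = sqlite3.connect(f"file:{db_path}?mode=ro", uri=True)
    try:
        rows = conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        ).fetchall()
    finally:
        conn.close()
    return [name for (name,) in rows]


def has_table(db_path, table):
    """True if the given table exists in the database."""
    return table in list_tables(db_path)
```

Usage: `has_table("path/to/your.db", "gpts_app")`.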

Install the OpenAI-related dependencies

(dbgpt_env)/mnt/workspace/DB-GPT> pip install -e ".[openai]"
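The second error came from an example DAG under `examples/awel/` importing `openai`, which the `[default]` extra had not installed. Before restarting the server, `importlib.util.find_spec` can confirm that optional packages are importable without actually importing them. The package list below is only an example, not a list of DB-GPT's requirements.

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names from `names` that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Example list; adjust it to whatever your DB-GPT setup needs.
    missing = missing_modules(["openai", "transformers", "torch"])
    print("missing:", missing or "none")
```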

On the next run the log shows no obvious error, but every time checkpoint loading reaches 80% the process prints "Killed" and exits:

2024-09-13 15:24:34 dsw-131579-bf84bc946-jmgg7 dbgpt.model.adapter.hf_adapter[1763] INFO Load model from /mnt/workspace/DB-GPT/models/glm-4-9b-chat, from_pretrained_kwargs: {'torch_dtype': torch.float32}

done

Model Unified Deployment Mode!

Loading checkpoint shards: 0

Loading checkpoint shards: 80%|██████████████████████████████████████████████████████████████████████████████████████▍ | 8/10 [01:24<00:21, 10.57s/it]

Killed

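Again just "Killed" with no obvious error. The likely cause is insufficient memory, or could the model itself be the problem?… Because the default configuration loads the model on the CPU, the limit here is host RAM rather than GPU memory. A bare "Killed" with no Python traceback usually means the Linux OOM killer terminated the process.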
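A back-of-the-envelope calculation supports the out-of-memory explanation. The log line above shows the model being loaded with `torch_dtype: torch.float32`, i.e. 4 bytes per parameter. The weights alone therefore need roughly parameter count × 4 bytes of RAM, before activations and other overhead. The parameter counts used below (about 9 and 6 billion) are approximations read off the model names, not exact figures.

```python
# Bytes needed per parameter for common torch dtypes.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2, "int8": 1}


def weights_gib(n_params, dtype="float32"):
    """Approximate memory for the model weights alone, in GiB."""
    return n_params * BYTES_PER_PARAM[dtype] / 2**30


def total_ram_gib(rss_kib, mem_percent):
    """Infer total RAM from one `ps aux` row: RSS (in KiB) and %MEM."""
    return rss_kib / (mem_percent / 100) / 2**20


# Approximate parameter counts (assumed from the model names).
for name, params in [("glm-4-9b-chat", 9e9), ("chatglm2-6b", 6e9)]:
    for dtype in ("float32", "bfloat16"):
        print(f"{name:14s} {dtype:8s} ~{weights_gib(params, dtype):5.1f} GiB")
```

At about 9 billion parameters in float32 the weights come to roughly 33.5 GiB. The `ps` output in the restart step later in this post (RSS 25252220 KiB at 76.6% MEM) implies the instance has only about 31 GiB of RAM. That is consistent with the process being killed before the last shards were loaded.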

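Install a model with lower memory requirements (mainly to see whether a different model works; the default configuration runs everything on the CPU, so no GPU memory is used)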

Reference

(dbgpt_env)/mnt/workspace/DB-GPT/models> git clone https://www.modelscope.cn/ZhipuAI/chatglm2-6b.git

(dbgpt_env)/mnt/workspace/DB-GPT> vi .env

#LLM_MODEL=glm-4-9b-chat

LLM_MODEL=chatglm2-6b
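In `.env`, a line starting with `#` is a comment, so after this edit only `LLM_MODEL=chatglm2-6b` is active. The helper below is a simplified, hypothetical reader (not DB-GPT's own configuration loader) that shows which value takes effect. It skips comments and blank lines, and in this sketch a later assignment overrides an earlier one.

```python
def parse_env(text):
    """Parse KEY=VALUE lines from .env-style text into a dict."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines and comments such as "#LLM_MODEL=glm-4-9b-chat".
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Later assignments override earlier ones; surrounding quotes are dropped.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


sample = """
#LLM_MODEL=glm-4-9b-chat
LLM_MODEL=chatglm2-6b
"""
print(parse_env(sample)["LLM_MODEL"])  # chatglm2-6b
```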

(dbgpt_env)/mnt/workspace/DB-GPT> nohup python dbgpt/app/dbgpt_server.py >> logs/log.3 &

The web page can now be accessed continuously; the service no longer crashes partway through.

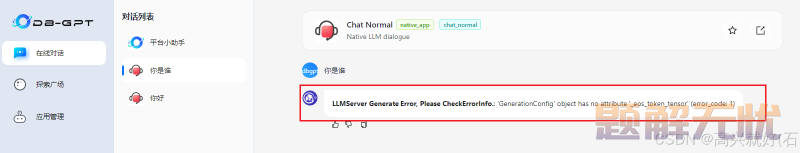

But asking a question produces an error.

Logs

# Log printed by the server running in the background

(dbgpt_env)/mnt/workspace/DB-GPT> vi logs/log.3

...

2024-09-13 16:26:15 dsw-131579-bf84bc946-jmgg7 dbgpt.model.adapter.base[11079] INFO Message version is v2

2024-09-13 16:26:15 dsw-131579-bf84bc946-jmgg7 dbgpt.model.cluster.worker.default_worker[11079] ERROR Model inference error, detail: Traceback (most recent call last):

File "/mnt/workspace/DB-GPT/dbgpt/model/cluster/worker/default_worker.py", line 160, in generate_stream

for output in generate_stream_func(

File "/home/pai/envs/dbgpt_env/lib/python3.10/site-packages/torch/utils/_contextlib.py", line 35, in generator_context

response = gen.send(None)

File "/home/pai/envs/dbgpt_env/lib/python3.10/site-packages/fastchat/model/model_chatglm.py", line 106, in generate_stream_chatglm

for total_ids in model.stream_generate(**inputs,**gen_kwargs):

File "/home/pai/envs/dbgpt_env/lib/python3.10/site-packages/torch/utils/_contextlib.py", line 35, in generator_context

response = gen.send(None)

File "/root/.cache/huggingface/modules/transformers_modules/chatglm2-6b/modeling_chatglm.py", line 1124, in stream_generate

logits_processor = self._get_logits_processor(

File "/home/pai/envs/dbgpt_env/lib/python3.10/site-packages/transformers/generation/utils.py", line 866, in _get_logits_processor

and generation_config._eos_token_tensor is not None

AttributeError: 'GenerationConfig' object has no attribute '_eos_token_tensor'

llm_adapter: FastChatLLMModelAdapterWrapper(fastchat.model.model_adapter.ChatGLMAdapter)

model prompt:

You are a helpful AI assistant.

[Round 1]

问:你是谁

答:

stream output:

INFO: 10.224.166.224:0 - "GET /api/v1/chat/dialogue/list HTTP/1.1" 200 OK

# Webserver log

(dbgpt_env)/mnt/workspace/DB-GPT> vi logs/dbgpt_webserver.log

...

2024-09-13 16:26:15 | ERROR | dbgpt.model.cluster.worker.default_worker | Model inference error, detail: Traceback (most recent call last):

... (same traceback as in logs/log.3 above) ...

AttributeError: 'GenerationConfig' object has no attribute '_eos_token_tensor'
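Both files record the same failure, but they use different layouts. `logs/log.3` writes `timestamp host logger[pid] LEVEL message`, while `logs/dbgpt_webserver.log` writes `timestamp | LEVEL | logger | message`. A small filter can pull out the ERROR records from long logs like these. The regular expressions below are inferred only from the excerpts in this post and are assumptions about DB-GPT's log format in general.

```python
import re

# Layout seen in logs/log.3: "<date> <time> <host> <logger>[<pid>] <LEVEL> <message>"
CONSOLE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) \S+ (?P<logger>\S+)\[\d+\] "
    r"(?P<level>[A-Z]+) (?P<msg>.*)$"
)
# Layout seen in logs/dbgpt_webserver.log: "<ts> | <LEVEL> | <logger> | <message>"
PIPED = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) \| (?P<level>[A-Z]+) \| "
    r"(?P<logger>\S+) \| (?P<msg>.*)$"
)


def parse_line(line):
    """Return (timestamp, level, logger, message), or None for other lines."""
    for pattern in (CONSOLE, PIPED):
        m = pattern.match(line)
        if m:
            return m["ts"], m["level"], m["logger"], m["msg"]
    return None


def errors(lines):
    """Yield parsed records whose level is ERROR."""
    for line in lines:
        rec = parse_line(line.rstrip("\n"))
        if rec and rec[1] == "ERROR":
            yield rec
```

Usage: `with open("logs/log.3", encoding="utf-8") as f: for ts, level, logger, msg in errors(f): print(ts, logger, msg[:80])`.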

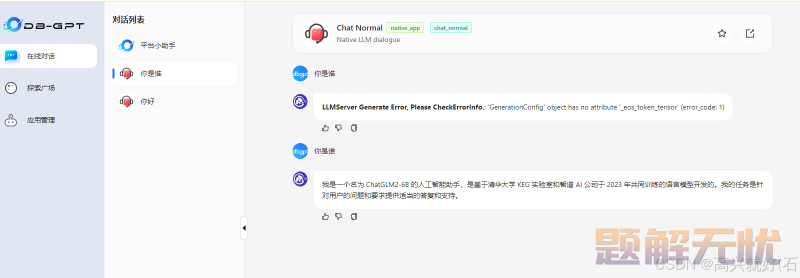

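This looks like a `transformers` version incompatibility, so `transformers` needs to be downgraded. The traceback shows the model's remote code (`modeling_chatglm.py`, cached under `/root/.cache/huggingface/modules`) calling the transformers method `_get_logits_processor` directly. In transformers 4.44.2 that method reads the internal attribute `generation_config._eos_token_tensor`, which was never set on this code path. See: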

https://github.com/THUDM/ChatGLM3/issues/1299

https://github.com/xorbitsai/inference/issues/1962

# Check the version

(dbgpt_env)/mnt/workspace/DB-GPT> python

>>> import transformers

>>> print(transformers.__version__)

4.44.2

>>>

# Downgrade `transformers` to a specific version, e.g. 4.40.2

(dbgpt_env)/mnt/workspace/DB-GPT> pip install transformers==4.40.2

(dbgpt_env)/mnt/workspace/DB-GPT> python

>>> import transformers

>>> print(transformers.__version__)

4.40.2

>>>
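The same check works without an interactive session. The sketch below reads the installed version through `importlib.metadata` and compares it with the two versions observed in this post: 4.44.2 failed with the `_eos_token_tensor` error and 4.40.2 worked. Versions in between were not tested here, so the helper reports them as untested. Treating everything at or above 4.44.2 as "likely affected" is an assumption.

```python
from importlib.metadata import PackageNotFoundError, version

KNOWN_GOOD = (4, 40, 2)  # worked with chatglm2-6b in this post
KNOWN_BAD = (4, 44, 2)   # raised AttributeError: ... '_eos_token_tensor'


def parse_version(text):
    """Turn '4.40.2' or '4.45.0.dev0' into a 3-tuple of ints."""
    numbers = []
    for piece in text.split(".")[:3]:
        digits = ""
        for ch in piece:  # keep leading digits only, e.g. '0rc1' -> '0'
            if not ch.isdigit():
                break
            digits += ch
        numbers.append(int(digits) if digits else 0)
    while len(numbers) < 3:
        numbers.append(0)
    return tuple(numbers)


def classify(text):
    """Compare a version string against the versions observed in this post."""
    v = parse_version(text)
    if v == KNOWN_GOOD:
        return "known good"
    if v >= KNOWN_BAD:
        return "likely affected"
    return "untested"


def installed_version(dist="transformers"):
    """Installed version of a distribution, or None if it is not installed."""
    try:
        return version(dist)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    v = installed_version()
    print(v, classify(v) if v else "not installed")
```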

Restart the service

# Kill the old process

(dbgpt_env)/mnt/workspace/DB-GPT> ps aux | grep dbgpt_server.py

root 11079 2.5 76.6 33327856 25252220 pts/1 Sl 16:15 0:44 python dbgpt/app/dbgpt_server.py

root 16527 0.0 0.0 9356 428 pts/1 S+ 16:44 0:00 grep dbgpt_server.py

(dbgpt_env)/mnt/workspace/DB-GPT> kill 11079

(dbgpt_env)/mnt/workspace/DB-GPT> ps aux | grep dbgpt_server.py

root 11079 2.5 2.6 8939816 864528 pts/1 Sl 16:15 0:46 python dbgpt/app/dbgpt_server.py

root 16566 0.0 0.0 9356 404 pts/1 S+ 16:45 0:00 grep dbgpt_server.py

(dbgpt_env)/mnt/workspace/DB-GPT> kill -9 11079

bash: kill: (11079) - No such process

[1] Terminated nohup python dbgpt/app/dbgpt_server.py >> logs/log.3

(dbgpt_env)/mnt/workspace/DB-GPT> ps aux | grep dbgpt_server.py

root 16593 0.0 0.0 9356 420 pts/1 S+ 16:45 0:00 grep dbgpt_server.py

(dbgpt_env)/mnt/workspace/DB-GPT>

# Restart

(dbgpt_env)/mnt/workspace/DB-GPT> nohup python dbgpt/app/dbgpt_server.py >> logs/log.4 &

[4] 16769

(dbgpt_env)/mnt/workspace/DB-GPT> nohup: ignoring input and redirecting stderr to stdout

(dbgpt_env)/mnt/workspace/DB-GPT>

(dbgpt_env)/mnt/workspace/DB-GPT> ps aux | grep dbgpt_server.py

root 16769 125 1.4 3651616 469864 pts/1 Rl 16:46 0:03 python dbgpt/app/dbgpt_server.py

root 16790 0.0 0.0 9356 396 pts/1 S+ 16:46 0:00 grep dbgpt_server.py

(dbgpt_env)/mnt/workspace/DB-GPT>
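`ps` only shows that the process exists. It does not show whether the web server is already accepting connections, which can take a while when a model is loaded at startup. A small polling helper can wait for the port to accept TCP connections. Port 5670 in the usage line is DB-GPT's default web port as far as I know, but treat it as an assumption and use whatever port your configuration sets.

```python
import socket
import time


def wait_for_port(host, port, timeout=300.0, interval=2.0):
    """Return True once host:port accepts a TCP connection, False after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Refused or timed out: the server is not listening (yet).
            remaining = deadline - time.monotonic()
            if remaining <= 0:
                return False
            time.sleep(min(interval, remaining))
```

Usage: `wait_for_port("127.0.0.1", 5670)` right after the `nohup` line.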

It now appears to work normally, but responses are very slow because inference runs on the CPU rather than the GPU.
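The repeated `WARNING CUDA is not available.` lines in the log that follows confirm that inference runs on the CPU. Whether PyTorch can see a GPU at all can be checked from the same environment with `torch.cuda.is_available()`. The helper below is a generic sketch, not DB-GPT's own device-selection logic, and it falls back to "cpu" when PyTorch is not installed.

```python
import importlib.util


def pick_device():
    """Return "cuda" if PyTorch is installed and can see a GPU, else "cpu"."""
    if importlib.util.find_spec("torch") is None:
        return "cpu"
    import torch  # imported lazily so the check also works without PyTorch

    return "cuda" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print("inference device:", pick_device())
```

If this prints `cpu` on an instance that does have a GPU, the installed PyTorch build may lack CUDA support.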

Background log

(dbgpt_env)/mnt/workspace/DB-GPT> tail -f logs/log.4

2024-09-13 17:40:20 dsw-131579-bf84bc946-jmgg7 dbgpt.app.openapi.api_v1.api_v1[16769] INFO get_chat_instance:conv_uid='d779bfc4-71a9-11ef-9627-00163e369829' user_input='你是谁' user_name='001' chat_mode='chat_normal' app_code='' temperature=0.5 select_param='' model_name='chatglm2-6b' incremental=False sys_code=None ext_info={}

2024-09-13 17:40:20 dsw-131579-bf84bc946-jmgg7 dbgpt.core.awel.runner.local_runner[16769] INFO Begin run workflow from end operator, id: 04408d54-05ee-48e8-8b89-feb3188cb7b6, runner: <dbgpt.core.awel.runner.local_runner.DefaultWorkflowRunner object at 0x7f6473ac6e30>

Get prompt template of scene_name: chat_normal with model_name: chatglm2-6b, proxyllm_backend: None, language: en

INFO: 10.224.166.224:0 - "POST /api/v1/chat/completions HTTP/1.1" 200 OK

2024-09-13 17:40:20 dsw-131579-bf84bc946-jmgg7 dbgpt.core.awel.runner.local_runner[16769] INFO Begin run workflow from end operator, id: 98350a3c-ae96-4ecc-95d3-404b6d07a242, runner: <dbgpt.core.awel.runner.local_runner.DefaultWorkflowRunner object at 0x7f6473ac6e30>

2024-09-13 17:40:20 dsw-131579-bf84bc946-jmgg7 dbgpt.app.scene.base_chat[16769] INFO payload request:

ModelRequest(model='chatglm2-6b', messages=[ModelMessage(role='system', content='You are a helpful AI assistant.', round_index=0), ModelMessage(role='human', content='你是谁', round_index=1), ModelMessage(role='ai', content="**LLMServer Generate Error, Please CheckErrorInfo.**: 'GenerationConfig' object has no attribute '_eos_token_tensor' (error_code: 1)", round_index=1), ModelMessage(role='human', content='你是谁', round_index=0)], temperature=0.6, top_p=None, max_new_tokens=1024, stop=None, stop_token_ids=None, context_len=None,echo=False, span_id='ed41b29c5e3db233992195daae98350f:fe33898361e7076c', context=ModelRequestContext(stream=True, cache_enable=False, user_name='001', sys_code=None, conv_uid=None, span_id='ed41b29c5e3db233992195daae98350f:fe33898361e7076c', chat_mode='chat_normal', chat_param=None, extra={}, request_id=None))

2024-09-13 17:40:20 dsw-131579-bf84bc946-jmgg7 dbgpt.core.awel.runner.local_runner[16769] INFO Begin run workflow from end operator, id: 4b224d04-564d-4267-910d-8e66ebb560e8, runner: <dbgpt.core.awel.runner.local_runner.DefaultWorkflowRunner object at 0x7f6473ac6e30>

2024-09-13 17:40:20 dsw-131579-bf84bc946-jmgg7 dbgpt.core.awel.operators.common_operator[16769] INFO branch_input_ctxs 0 result None, is_empty: False

2024-09-13 17:40:20 dsw-131579-bf84bc946-jmgg7 dbgpt.core.awel.operators.common_operator[16769] INFO Skip node name llm_model_cache_node

2024-09-13 17:40:20 dsw-131579-bf84bc946-jmgg7 dbgpt.core.awel.operators.common_operator[16769] INFO branch_input_ctxs 1 result True, is_empty: False

2024-09-13 17:40:20 dsw-131579-bf84bc946-jmgg7 dbgpt.core.awel.runner.local_runner[16769] INFO Skip node name llm_model_cache_node, node id 26f2c266-8283-4d56-8feb-4df7ee5e2d70

2024-09-13 17:40:20 dsw-131579-bf84bc946-jmgg7 dbgpt.model.adapter.base[16769] INFO Message version is v2

llm_adapter: FastChatLLMModelAdapterWrapper(fastchat.model.model_adapter.ChatGLMAdapter)

model prompt:

You are a helpful AI assistant.

[Round 1]

问:你是谁

答:**LLMServer Generate Error, Please CheckErrorInfo.**: 'GenerationConfig' object has no attribute '_eos_token_tensor' (error_code: 1)

[Round 2]

问:你是谁

答:

stream output:

我2024-09-13 17:40:26 dsw-131579-bf84bc946-jmgg7 dbgpt.model.cluster.worker.default_worker[16769] INFO is_first_generate, usage: {'prompt_tokens': 85,'completion_tokens': 1,'total_tokens': 86}

2024-09-13 17:40:26 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

是一个2024-09-13 17:40:27 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

名为2024-09-13 17:40:29 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

Chat2024-09-13 17:40:30 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

GL2024-09-13 17:40:31 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

M2024-09-13 17:40:32 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

22024-09-13 17:40:33 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.-2024-09-13 17:40:34 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

62024-09-13 17:40:35 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

B2024-09-13 17:40:36 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

2024-09-13 17:40:38 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

的人工2024-09-13 17:40:39 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

智能2024-09-13 17:40:40 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

助手2024-09-13 17:40:41 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

,2024-09-13 17:40:42 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

是基于2024-09-13 17:40:43 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

清华大学2024-09-13 17:40:44 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

KE2024-09-13 17:40:45 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

G2024-09-13 17:40:46 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

2024-09-13 17:40:46 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

实验室2024-09-13 17:40:47 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

和2024-09-13 17:40:49 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

智2024-09-13 17:40:50 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

谱2024-09-13 17:40:51 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

AI2024-09-13 17:40:52 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

公司2024-09-13 17:40:53 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

于2024-09-13 17:40:55 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

2024-09-13 17:40:56 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

22024-09-13 17:40:57 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

02024-09-13 17:40:58 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

22024-09-13 17:40:59 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

32024-09-13 17:41:01 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

年2024-09-13 17:41:02 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

共同2024-09-13 17:41:03 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

训练2024-09-13 17:41:04 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

的语言2024-09-13 17:41:05 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

模型2024-09-13 17:41:06 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

开发的2024-09-13 17:41:07 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

。2024-09-13 17:41:08 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

我的2024-09-13 17:41:09 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

任务2024-09-13 17:41:10 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

是2024-09-13 17:41:11 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

针对2024-09-13 17:41:13 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

用户2024-09-13 17:41:14 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

的问题2024-09-13 17:41:15 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

和要求2024-09-13 17:41:16 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

提供2024-09-13 17:41:18 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

适当的2024-09-13 17:41:19 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

答复2024-09-13 17:41:20 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

和支持2024-09-13 17:41:21 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

。2024-09-13 17:41:22 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

2024-09-13 17:41:23 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

2024-09-13 17:41:23 dsw-131579-bf84bc946-jmgg7 dbgpt.model.cluster.worker.default_worker[16769] INFO finish_reason: stop

2024-09-13 17:41:23 dsw-131579-bf84bc946-jmgg7 dbgpt.util.model_utils[16769] WARNING CUDA is not available.

full stream output:

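我是一个名为 ChatGLM2-6B 的人工智能助手,是基于清华大学 KEG 实验室和智谱 AI 公司于 2023 年共同训练的语言模型开发的。我的任务是针对用户的问题和要求提供适当的答复和支持。

(English: I am an AI assistant named ChatGLM2-6B, developed on a language model jointly trained by Tsinghua University's KEG Lab and Zhipu AI in 2023. My task is to provide appropriate answers and support for users' questions and requests.)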

model generate_stream params:

{'model': 'chatglm2-6b','messages': [ModelMessage(role='system', content='You are a helpful AI assistant.', round_index=0), ModelMessage(role='human', content='你是谁', round_index=1), ModelMessage(role='ai', content="**LLMServer Generate Error, Please CheckErrorInfo.**: 'GenerationConfig' object has no attribute '_eos_token_tensor' (error_code: 1)", round_index=1), ModelMessage(role='human', content='你是谁', round_index=0)],'temperature': 0.6,'max_new_tokens': 1024,'echo': False,'span_id': 'ed41b29c5e3db233992195daae98350f:110cab04d2a06afe','context': {'stream': True,'cache_enable': False,'user_name': '001','sys_code': None,'conv_uid': None,'span_id': 'ed41b29c5e3db233992195daae98350f:fe33898361e7076c','chat_mode': 'chat_normal','chat_param': None,'extra': {},'request_id': None},'convert_to_compatible_format': False,'string_prompt': "system: You are a helpful AI assistant.\nhuman: 你是谁\nai: **LLMServer Generate Error, Please CheckErrorInfo.**: 'GenerationConfig' object has no attribute '_eos_token_tensor' (error_code: 1)\nhuman: 你是谁",'prompt': "You are a helpful AI assistant.\n\n[Round 1]\n\n问:你是谁\n\n答:**LLMServer Generate Error, Please CheckErrorInfo.**: 'GenerationConfig' object has no attribute '_eos_token_tensor' (error_code: 1)\n\n[Round 2]\n\n问:你是谁\n\n答:",'stop': None,'stop_token_ids': None}
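The `prompt` field above shows how the chat history is flattened into ChatGLM2's round-based format. It also explains why Round 1 looks odd: the earlier failed reply (`**LLMServer Generate Error...**`) was kept in the conversation as an `ai` message and sent back to the model as context. The function below is a hypothetical sketch that reproduces the string shape seen in this log. It is not FastChat's or DB-GPT's implementation, and the real template may differ in details such as full-width punctuation.

```python
SEP = "\n\n"


def build_chatglm2_prompt(system, turns):
    """Flatten (question, answer) turns into the layout seen in the log.

    The last turn's answer should be None, so the prompt ends with "答:"
    and the model continues from there.
    """
    parts = [system + SEP] if system else []
    for round_no, (question, answer) in enumerate(turns, start=1):
        parts.append(f"[Round {round_no}]{SEP}问:{question}{SEP}答:")
        if answer is not None:
            parts.append(answer + SEP)
    return "".join(parts)


err = ("**LLMServer Generate Error, Please CheckErrorInfo.**: "
       "'GenerationConfig' object has no attribute '_eos_token_tensor' (error_code: 1)")
prompt = build_chatglm2_prompt(
    "You are a helpful AI assistant.", [("你是谁", err), ("你是谁", None)]
)
```

Starting a new conversation in the web UI keeps such stale error text out of later prompts.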

Switch to running on the GPU

Switch to a different LLM

Try out other features

…
